Case Study: Using AI in a Lean Marketing Machine

How a Small Marketing Team Used AI - and Better Data Habits - to Understand What Drives Results

Bloom & Nest Home Goods (a fictionalized example inspired by real teams) is a small online brand selling seasonal home and fragrance products, including pumpkin spice diffusion oils, hand-blown glass ornaments, and a winter hearth candle trio.

They were sending frequent email campaigns, but couldn’t answer a foundational question:

Which email themes reliably drive engagement and sales?

This case study shows how Bloom & Nest applied the core skills we’ve been teaching to get clarity: framing the problem, preparing structured inputs, evaluating AI results with human judgment, and adding small governance habits.

A quick-start guide at the end walks through the first steps for bringing AI into your team’s marketing analysis, whatever its size.

Situation: Life As a Lean Marketing Team

Bloom & Nest’s marketing setup will look familiar to anyone who’s worked in a small, fast-moving business:

Campaign names varied widely (“Fall Drop,” “Cozy Update,” “wk3/Oct”).

Some emails were labeled with their theme (Launch, Seasonal, Care Tips); others had no labels at all.

Tracking links (so the team could see what happened after someone clicked) were inconsistent.

Older campaigns came from multiple platforms and didn’t follow the same naming or tagging conventions.

Drafts lived in a mix of Google Drive, Gmail, and the email/SMS platform.

Product descriptions differed in tone, structure, and detail.

This made it hard for the team to see patterns or explain why some emails worked better than others. The founder believed that seasonal content was the big driver. The marketing manager wasn’t convinced. The way their content and data were organized made it nearly impossible to answer the question with confidence.

Most small teams have a version of this: scattered inputs, inconsistent labels, and no single place that shows the whole story.

Challenge: Use AI to Uplevel Email Performance

When Bloom & Nest first tried using AI to “analyze our marketing,” the outputs looked polished, but they weren’t reliable:

AI misinterpreted product names (e.g., flagged “Morning Matcha” as wellness content).

It confidently referenced a “Black Friday 2021 sale” that never happened.

Some performance numbers didn’t match the team’s own metrics.

Recommendations leaned on generic industry advice instead of the brand’s real behavior.

Nothing was “wrong” with the AI itself. The lack of useful insight came from how the team’s own content and data were organized.

Before they could get trustworthy analysis, Bloom & Nest needed to give the AI clarity and structure: a focused question and well-prepared inputs.

Approach: Getting AI to Add Real Value

The team approached this project using the practical framework from our AI at Work series: start with a clear question, organize the inputs, then review the results with human judgment before refining the process.

Step 1: Frame the Question Clearly

Instead of asking AI to “analyze all our marketing,” the team narrowed the problem to something specific and answerable:

“Summarize our recent email campaigns and group them into consistent themes we define, so we can identify which types of content drive engagement and conversions.”

A well-defined question set boundaries around what AI should do (summaries, classifications, basic analysis) and what remained human responsibility (strategy, interpretation, and decisions).

It also established the right success criteria:

Accurate summaries

Correct classification into the chosen themes

Insights that reflect actual performance, not AI’s assumptions about marketing

This reframing alone made the AI process more predictable and easier to evaluate.

Step 2: Prepare Better Inputs

Next, the team improved the information they were feeding into the AI. This wasn’t a big cleanup project, just a focused, lightweight effort to make the inputs clearer and more consistent.

Create Consistent Campaign Names

The team renamed the last 18 months of emails using a simple format: date + category + topic.

This gave the AI (and the team) a predictable foundation: at a glance they could see when an email was sent and what it was about.
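If your campaign list lives in a spreadsheet or platform export, the naming rule is easy to apply with a few lines of code. Here’s a minimal Python sketch, assuming ISO dates, a ` | ` separator (some theme names contain spaces), and the six themes Bloom & Nest settled on; the function name and exact format are illustrative, not a feature of any email platform.

```python
from datetime import date

# The six content themes Bloom & Nest agreed on.
CATEGORIES = ("Launch", "Restock", "Care Tips", "Behind the Scenes", "Seasonal", "Promotion")


def campaign_name(sent_on: date, category: str, topic: str) -> str:
    """Build a 'date + category + topic' campaign name.

    Hypothetical convention: ISO date and ' | ' as the separator.
    """
    if category not in CATEGORIES:
        raise ValueError(f"Unknown category {category!r}; expected one of {CATEGORIES}")
    return f"{sent_on.isoformat()} | {category} | {topic.strip()}"


# Renaming an old "Fall Drop" email (date and topic are made up for illustration):
print(campaign_name(date(2024, 10, 15), "Seasonal", "Pumpkin Spice Diffusion Oil"))
# 2024-10-15 | Seasonal | Pumpkin Spice Diffusion Oil
```

Rejecting unknown categories at naming time keeps ad hoc labels like “wk3/Oct” from creeping back in.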

Define a Small Set of Content Themes

Bloom & Nest agreed on six clear, durable categories: Launch, Restock, Care Tips, Behind the Scenes, Seasonal, Promotion.

Short, one-sentence definitions ensured everyone - including AI - knew what each category meant.

Clean Up Missing or Inconsistent Metadata

They filled in missing dates, corrected a few major mislabels, and retired duplicate tags (e.g., “fall,” “autumn,” “fall-promo” all became “Seasonal”).

Perfection wasn’t the goal - clarity was. These steps made the inputs understandable to both the AI and the team.
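Retiring duplicate tags is essentially a lookup table. A minimal Python sketch, assuming tags arrive as plain strings: the three seasonal aliases come from the example above, and anything the table doesn’t recognize comes back as `None` so a person decides instead of the script guessing. The `TAG_ALIASES` name and structure are illustrative.

```python
from typing import Optional

THEMES = ("Launch", "Restock", "Care Tips", "Behind the Scenes", "Seasonal", "Promotion")

# Duplicate tags retired in favor of one theme; extend with your own aliases.
TAG_ALIASES = {
    "fall": "Seasonal",
    "autumn": "Seasonal",
    "fall-promo": "Seasonal",
}


def normalize_tag(raw: Optional[str]) -> Optional[str]:
    """Map a raw tag to one of the six themes, or None if a human needs to decide."""
    if raw is None:
        return None
    key = raw.strip().lower()
    # Exact theme names pass through, ignoring case and stray whitespace.
    for theme in THEMES:
        if key == theme.lower():
            return theme
    return TAG_ALIASES.get(key)


raw_tags = ["autumn", "Care Tips", "fall-promo", "wk3/Oct", None]
print([normalize_tag(t) for t in raw_tags])
# ['Seasonal', 'Care Tips', 'Seasonal', None, None]
```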

Give Product and Brand Context

To prevent AI from drifting into generic marketing language, the team provided information about the company and a short description of Bloom & Nest’s target audience.

Product descriptions were scattered, so the team pulled them into one file. They used AI to create first-pass summaries of each product’s scent, tone, and purpose, then manually corrected the descriptions for accuracy. Finally, they gave the AI the corrected product catalogue as reference material.

Together, the company and audience notes and the corrected product catalogue reduced noise in later analysis, and the team decided to maintain both going forward.

Prepare the Right Metrics

Content alone wasn’t enough. The AI also needed structured performance data, so the team pulled together a small set of useful metrics that reflected what they cared most about:

Open rate (baseline engagement)

Click-through rate (actual interest in content)

Click-to-purchase rate or sales per send (conversion signal)

List growth or unsubscribes (signal of fatigue or mismatch)

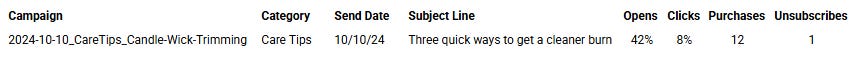

They organized these in a simple, consistent structure with one row per campaign with the same fields each time. Below is an example row; the full table followed this format for every campaign:
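In code, “one row per campaign with the same fields each time” maps naturally onto a small record type. A minimal Python sketch, assuming your platform export gives raw counts (delivered, opens, clicks, purchases, unsubscribes). The field names are hypothetical, and defining click-through rate as clicks per delivered email is an assumption, since some platforms report clicks per open instead.

```python
from dataclasses import dataclass


def safe_rate(numerator: int, denominator: int) -> float:
    """Divide, returning 0.0 instead of failing when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


@dataclass(frozen=True)
class CampaignRow:
    name: str
    category: str
    delivered: int
    opens: int
    clicks: int
    purchases: int
    unsubscribes: int

    @property
    def open_rate(self) -> float:  # baseline engagement
        return safe_rate(self.opens, self.delivered)

    @property
    def click_through_rate(self) -> float:  # interest in the content
        return safe_rate(self.clicks, self.delivered)

    @property
    def click_to_purchase_rate(self) -> float:  # conversion signal
        return safe_rate(self.purchases, self.clicks)

    @property
    def unsubscribe_rate(self) -> float:  # fatigue or mismatch
        return safe_rate(self.unsubscribes, self.delivered)


# Hypothetical numbers, for illustration only.
row = CampaignRow("2024-10-15 | Care Tips | Wick Trimming", "Care Tips", 1000, 400, 50, 5, 2)
print(row.open_rate, row.click_through_rate, row.click_to_purchase_rate, row.unsubscribe_rate)
# 0.4 0.05 0.1 0.002
```

Computing rates from raw counts, rather than copying percentages from different tools, keeps every row comparable, which matters when older campaigns came from multiple platforms.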

How They Told AI to Use the Metrics

AI does better when you tell it exactly how the numbers matter. Bloom & Nest used prompts like:

“Here are the performance metrics we track. When looking for patterns, prioritize click-through rate and purchases. Use open rate as context, not the main signal.”

“Compare categories using averages, ranges, and notable outliers. Do not generalize beyond the data provided.”

This kept the model grounded in what the team valued instead of falling back on generic marketing logic.
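It also helps to compute the same category comparison yourself, so you can check the AI’s averages and ranges against your own numbers. A minimal Python sketch using only the standard library, assuming you’ve already extracted (category, value) pairs for one metric such as click-through rate; the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean


def summarize_by_category(pairs: list[tuple[str, float]]) -> dict[str, dict]:
    """Return the count, average, and range (min/max) of one metric per category."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for category, value in pairs:
        grouped[category].append(value)
    return {
        category: {
            "n": len(values),
            "mean": mean(values),
            "min": min(values),
            "max": max(values),
        }
        for category, values in grouped.items()
    }


# Hypothetical click-through rates, for illustration only.
ctr = [("Care Tips", 0.06), ("Care Tips", 0.05), ("Launch", 0.03), ("Launch", 0.02)]
for category, stats in summarize_by_category(ctr).items():
    print(category, stats)
```

If the AI’s category averages don’t match this output, that’s an early signal to check the inputs before trusting its conclusions.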

How to Ask AI to Analyze the Data

Once the table was prepared, they used prompts such as:

“Using the table provided, identify which content categories perform best across opens, clicks, and purchases. Show your reasoning step-by-step, including which metrics influenced your conclusion.”

And:

“Highlight any campaigns that deviate significantly from their category’s typical performance and propose possible reasons, based only on the content summaries.”

Giving AI structured metrics and explaining how to use them reduced misinterpretations and made the performance analysis far more reliable. It also helped the team push past vague impressions (“seasonal content does well”) toward evidence they could act on.
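For the outlier prompt, one option is to flag unusual campaigns deterministically first and ask the AI only to suggest possible reasons. A minimal sketch, assuming a simple heuristic rather than a statistical test: a campaign is flagged when its metric sits more than 50% above or below its category’s median. The threshold and function name are illustrative; tune them to your list size.

```python
from collections import defaultdict
from statistics import median


def flag_outliers(rows: list[tuple[str, str, float]], threshold: float = 0.5) -> list[str]:
    """Return names of campaigns whose metric deviates from their category's
    median by more than `threshold`, expressed as a fraction of that median."""
    by_category: dict[str, list[float]] = defaultdict(list)
    for _, category, value in rows:
        by_category[category].append(value)
    medians = {category: median(values) for category, values in by_category.items()}

    flagged = []
    for name, category, value in rows:
        typical = medians[category]
        # Skip categories whose median is zero; relative deviation is undefined there.
        if typical and abs(value - typical) / typical > threshold:
            flagged.append(name)
    return flagged


# Hypothetical (name, category, click-through %) rows, for illustration only.
rows = [
    ("Care Tips A", "Care Tips", 4.0),
    ("Care Tips B", "Care Tips", 5.0),
    ("Care Tips C", "Care Tips", 6.0),
    ("Care Tips D", "Care Tips", 12.0),
    ("Launch A", "Launch", 2.0),
]
print(flag_outliers(rows))  # ['Care Tips D']
```

With only a handful of sends per theme, the median itself is fragile, so treat flags as prompts for a closer look rather than conclusions.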

Step 3: Evaluate Outputs - Trust, Adapt, or Toss

With clearer inputs, the team tested how well the AI followed their instructions and whether the outputs could be used in real analysis. They used three prompts:

Prompt 1: Summaries and classification

“Here are 20 past Bloom & Nest newsletters with their dates and topics. Summarize each in one sentence and classify it using the six categories provided.”

This tested comprehension. If the AI couldn’t summarize or classify correctly, deeper analysis would not be reliable.
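Prompt 1 works best when the theme definitions travel with it, so the AI classifies against your categories rather than its own. A minimal sketch of assembling that prompt in Python, assuming each email is a dict with `date`, `topic`, and `body` keys; the function name, keys, and sample definitions are hypothetical, and the resulting text would be pasted into whichever AI tool your team uses.

```python
def build_classification_prompt(definitions: dict[str, str], emails: list[dict[str, str]]) -> str:
    """Assemble Prompt 1 with the category definitions and emails inlined."""
    lines = [
        f"Here are {len(emails)} past Bloom & Nest newsletters with their dates and topics. "
        f"Summarize each in one sentence and classify it using the {len(definitions)} categories provided.",
        "",
        "Categories (use these exact names):",
    ]
    lines += [f"- {name}: {definition}" for name, definition in definitions.items()]
    lines += ["", "Newsletters:"]
    for number, email in enumerate(emails, start=1):
        lines.append(f"{number}. [{email['date']}] {email['topic']}")
        lines.append(email["body"].strip())
    return "\n".join(lines)


# Hypothetical one-sentence definitions; write your own for all six themes.
definitions = {
    "Launch": "Introduces a product we have never sold before.",
    "Restock": "Announces that an existing product is available again.",
}
emails = [
    {"date": "2024-10-15", "topic": "Winter Hearth Candle Trio", "body": "Meet our newest candle set..."},
    {"date": "2024-11-02", "topic": "Pumpkin Spice Diffusion Oil", "body": "It's back in stock..."},
]
print(build_classification_prompt(definitions, emails))
```

Keeping the Launch and Restock definitions side by side is the kind of clarification that resolves the restock-versus-launch mix-up discussed under Adapt.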

Prompt 2: Identify performance patterns

“Using these summaries and the performance metrics (opens, clicks, purchases), identify which content themes perform best. Show your reasoning step-by-step.”

The “show your reasoning” instruction exposed the logic behind each conclusion, making review faster and more transparent.

Prompt 3: Flag unsupported claims

“Point out any conclusions that cannot be directly traced to the provided data.”

This limited AI’s tendency to overgeneralize or fall back on generic marketing patterns.
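Prompt 3 is easier to act on if you also ask the AI to list which campaigns support each conclusion; a short script can then check those citations against your table. A minimal sketch, assuming the AI’s answer has been turned into a list of claims, each with the campaign names it relies on (that structure is hypothetical). Claims that cite nothing, or cite campaigns that aren’t in your data, are candidates for Toss.

```python
def untraceable_claims(claims: list[dict], known_campaigns: set[str]) -> list[tuple[str, list[str]]]:
    """Return (claim, unknown citations) for every claim that cites no campaigns
    or cites campaigns missing from the data the AI was given."""
    known = {name.strip().lower() for name in known_campaigns}
    problems = []
    for claim in claims:
        cited = claim.get("campaigns", [])
        unknown = [c for c in cited if c.strip().lower() not in known]
        if not cited or unknown:
            problems.append((claim["claim"], unknown))
    return problems


# Hypothetical campaigns and claims, for illustration only.
known = {"2024-10-15 | Care Tips | Wick Trimming", "2024-11-02 | Restock | Pumpkin Spice Diffusion Oil"}
claims = [
    {"claim": "Care Tips emails drive clicks", "campaigns": ["2024-10-15 | Care Tips | Wick Trimming"]},
    {"claim": "The Black Friday 2021 sale drove record revenue", "campaigns": ["Black Friday 2021 sale"]},
    {"claim": "Seasonal emails always win", "campaigns": []},
]
for claim, unknown in untraceable_claims(claims, known):
    print(claim, unknown)
```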

How the Team Labeled the Outputs

Each AI response was labeled as Trust, Adapt, or Toss, creating a shared vocabulary for evaluating what the AI produced.

Trust (accurate and usable)

AI correctly identified that Care Tips emails had roughly twice the click-through rate of new-scent launches. The numbers matched internal analytics, and the AI’s step-by-step reasoning held up under review.

Adapt (directionally correct but needing edits)

AI sometimes misclassified campaigns; for example, it treated a restock announcement as a new product launch because of the language used. The team clarified the difference between the two categories, added clearer examples, and re-ran the prompt. The classification was correct on the next run.

Toss (unsupported or incorrect)

Some outputs were confidently wrong, like referencing a “Black Friday 2021” sale that never happened. Since this was a testing phase, the team excluded these results from the process. If an output couldn’t be traced back to real data, it wasn’t considered further.

The team added a brief note explaining why the output failed (AI filling in a pattern that wasn’t there) and updated their instructions for the AI. On the next test run, the error didn’t reappear. That gave them confidence these issues wouldn’t slip into real analysis later.

Step 4: Maintain a Simple Improvement Loop

To keep the workflow stable and transparent, the team documented:

The prompts they used

The inputs supplied

Trust/Adapt/Toss decisions

Notes on misunderstandings

Adjustments to examples or definitions
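A shared spreadsheet is usually enough for this log. If you’d rather generate it from a script, here’s a minimal Python sketch that records each review as a row and writes CSV with the standard library; the columns mirror the list above, and the sample entry paraphrases the restock/launch example from Step 3. The structure is an illustration, not a required format.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from enum import Enum


class Verdict(Enum):
    TRUST = "Trust"  # accurate and usable
    ADAPT = "Adapt"  # directionally correct, needs edits
    TOSS = "Toss"    # unsupported or incorrect


@dataclass
class ReviewEntry:
    prompt: str
    inputs: str
    verdict: Verdict
    note: str = ""        # misunderstanding observed, if any
    adjustment: str = ""  # change made to examples or definitions


def to_csv(entries: list[ReviewEntry]) -> str:
    """Serialize review entries as CSV text, one row per reviewed output."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ReviewEntry)])
    writer.writeheader()
    for entry in entries:
        row = asdict(entry)
        row["verdict"] = entry.verdict.value  # store the label, not the enum
        writer.writerow(row)
    return buffer.getvalue()


entry = ReviewEntry(
    prompt="Prompt 1: summaries and classification",
    inputs="20 newsletters + six theme definitions",
    verdict=Verdict.ADAPT,
    note="Restock announcement classified as Launch",
    adjustment="Clarified Restock vs. Launch and added examples",
)
print(to_csv([entry]))
```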

They also saved a small set of representative emails (10–12 campaigns that produced clean results) as a reference set for re-running prompts and making sure the AI stayed consistent over time.
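Re-running the reference set pays off when you compare the new answers to the saved ones. A minimal sketch, assuming you’ve stored the expected theme for each of the 10–12 reference campaigns and collected the AI’s latest classification for the same campaigns; the function name and labels are hypothetical. Any mismatch is a cue to review recent changes to prompts or definitions.

```python
from typing import Dict, Optional, Tuple


def check_reference_set(
    expected: Dict[str, str], latest: Dict[str, str]
) -> Tuple[float, Dict[str, Tuple[str, Optional[str]]]]:
    """Compare the AI's latest labels with the saved reference labels.

    Returns the share of reference campaigns that still match, plus a dict of
    campaign -> (expected label, latest label or None if it was missing).
    """
    mismatches = {}
    for campaign, label in expected.items():
        got = latest.get(campaign)
        if got != label:
            mismatches[campaign] = (label, got)
    agreement = 1 - len(mismatches) / len(expected) if expected else 1.0
    return agreement, mismatches


# Hypothetical labels, for illustration only.
expected = {
    "Wick trimming tips": "Care Tips",
    "Pumpkin spice oil is back": "Restock",
    "Winter hearth candle trio": "Launch",
    "Inside the glassblowing studio": "Behind the Scenes",
}
latest = dict(expected)
latest["Pumpkin spice oil is back"] = "Launch"  # a regression

agreement, mismatches = check_reference_set(expected, latest)
print(agreement)  # 0.75
print(mismatches)
```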

This wasn’t heavy governance; it was just enough structure to keep the work consistent.

Multi-faceted Results

Identified Business-Driving Performance Patterns

With a clear question, better inputs, and a consistent review process, the insights became easy to see and easier to trust.

“Care Tips” and “Behind the Scenes” emails consistently performed strongest in terms of open rates and clicks.

Restock announcements converted to a sale better than brand-new product launches.

Seasonal content mattered, but it was not the primary driver the founder assumed.

Cut Planning Time

Quarterly planning time dropped by more than half because the team no longer had to manually sift through months of campaigns for content or performance.

Understanding performance by content type also allowed them to set a more predictable content calendar.

Clearer Team Focus

The team shifted its emphasis from seasonal storytelling to the content types that actually drove engagement.

Stronger Metadata and Analysis

Simple naming conventions and ongoing product documentation now support every future analysis, whether human or AI.

These results mattered, but the more durable change was in how the team approached the work itself.

The Learning Went Beyond ‘How to Use AI’

As they moved through the project, the team sharpened habits that support good decisions in any organization: clarifying the question, organizing the inputs, reviewing results carefully, and building a simple, repeatable workflow.

Here’s what their experience shows:

Clarity moves work forward.

Bloom & Nest made real progress the moment they shifted from “analyze our marketing” to a specific, answerable question. That clarity simplified every step that followed.

Clean inputs create shared understanding.

Organizing information isn’t administrative noise. It’s what lets teams see the same picture. Clear names, shared categories, and consolidated notes reduced confusion and made the insights possible.

People’s judgment is still required.

Tools can summarize and sort, but they can’t decide which findings matter. The Trust/Adapt/Toss decisions helped the team practice making thoughtful, evidence-based choices.

A little structure goes a long way.

They didn’t need heavy processes, just enough structure to make the work predictable and repeatable: simple conventions for naming campaigns, defining themes, and reusing examples where they fit.

Better structure leads to better choices.

By organizing their information and reviewing outputs deliberately, Bloom & Nest finally saw what was truly working and could make decisions with more confidence.

Habits matter more than tools.

Whether a team uses Klaviyo or Mailchimp, Shopify or Squarespace, the underlying practices stay the same: ask a good question, prepare clear inputs, and review outputs with care. Good habits travel with you, no matter the platform.

Start Now: How to Do This With Your Team

If you want to put these ideas into practice, you don’t need a full audit or a big project. A short, focused exercise can help you see the same kinds of patterns Bloom & Nest uncovered and build the habits that make analysis easier over time.

Here’s a simple way to get started:

1. Pull 10–15 recent emails or recurring updates.

Don’t overthink which ones - recent, representative samples are enough. Don’t have marketing campaigns? Use email newsletters or product updates.

2. Create 4–7 clear content categories.

Keep them simple and mutually distinct.

3. Standardize names (date + category + topic).

This alone will clarify patterns you couldn’t see before.

4. Ask AI to summarize and classify them.

Use short, direct instructions.

5. Apply Trust/Adapt/Toss.

Identify what you can use as-is, what needs refinement, and what to ignore.

6. Record insights and update your categories for the next round.

This turns one exercise into a habit.

This exercise gives you a sharper view of the patterns behind your content: what you send, how often you send it, and which themes resonate.

Do you have a case study to share? Tell us about it!
